For businesses integrating AI across touchpoints, few challenges are as frustrating as “hallucinations” in AI-generated responses. Imagine your AI agent answering a specific customer query with a misleading or nonsensical response. The result? Delayed issue resolution, customer frustration, and wasted time.

This phenomenon, where generative AI models produce factually incorrect answers, is known as hallucination. According to a Forrester study, nearly 50% of decision-makers believe that these hallucinations prevent broader AI adoption in enterprises. In this blog, we’ll explain what AI hallucinations are, what causes them, and which types exist. We’ll also cover actionable steps to overcome them, supporting more accurate and reliable AI usage in business.

What Are Generative AI Hallucinations?

AI hallucinations are instances where an AI model generates misleading, incorrect, or completely nonsensical responses that don’t match the input context or query. This can happen even in well-trained AI models. It is especially likely when a model is asked complex questions for which it has limited data or context.

For example, an AI support agent might be asked about a specific product feature. Instead of answering accurately, it might confidently offer incorrect details, leading to customer confusion. Hallucinations arise from the way large language models (LLMs) are trained. These models learn from vast datasets that may contain conflicting information, and in some cases the model “fills in gaps” with fabricated details.

Types of Gen-AI Hallucinations

Hallucinations in generative AI models and LLMs can be broadly categorized based on cause and intent:

1. Intentional Hallucinations:

Intentional hallucinations occur when malicious actors purposefully inject incorrect or harmful data, often through adversarial attacks designed to manipulate an AI system. In cybersecurity contexts, for example, attackers may tamper with a system’s inputs or data to alter its output. This poses risks in industries where accuracy and trust are critical.

2. Unintentional Hallucinations:

Unintentional hallucinations arise from the AI model’s inherent limitations. Since LLMs are trained on vast, often unlabeled datasets, they may generate incorrect or conflicting answers when faced with ambiguous questions. This issue is further compounded in encoder-decoder architectures, where the model attempts to interpret nuanced language but may misfire, creating answers that appear plausible but are incorrect.

What Causes Gen-AI Models or LLMs to Hallucinate?

Understanding the causes of hallucinations can help mitigate them effectively. Here are some primary reasons AI models may hallucinate:

- Data Quality Issues: The training data used to develop LLMs isn’t always reliable or comprehensive. Incomplete, biased, or conflicting data can contribute to hallucinations.

- Complexity of LLMs: Large models like GPT-4 generate responses from learned associations and patterns rather than verified facts. When the input is unclear, this can lead to “invented” answers.

- Interpretation Gaps: Cultural contexts, industry-specific terminology, and language nuances can confuse AI models, leading to incorrect responses. This is especially relevant in customer service, where responses need precision.

Hallucinations in LLMs remain a barrier to enterprise-wide AI adoption, but several steps can help reduce their occurrence.

The Consequences of Gen-AI Hallucinations

AI hallucinations can create serious real-world challenges, impacting both customer experience and enterprise operations:

- Customer Dissatisfaction & Trust Issues: When an AI agent provides inaccurate information, it can frustrate customers, eroding trust in the company. For example, in a customer service setting, a hallucinatory response to a billing question might give the wrong figures, leading to confusion and complaints.

- Spread of Misinformation: AI systems that hallucinate in areas like news distribution or customer updates can unintentionally spread misinformation. For instance, if an AI system in a public safety context provides inaccurate data during a crisis, it could contribute to unnecessary panic or misdirected resources.

- Security Vulnerabilities: AI systems are also susceptible to adversarial attacks, where bad actors tweak inputs to manipulate AI outputs. In sensitive applications like cybersecurity, these attacks could be exploited to generate misleading responses, risking data integrity and system security.

- Bias Amplification and Legal Risks: Hallucinations can stem from biases embedded in training data, causing the AI to reinforce or exaggerate these biases in its outputs. This is particularly concerning in sectors like finance or healthcare, where incorrect information can lead to legal complications, misdiagnosis, or financial discrimination.

7 Effective Ways to Prevent AI Hallucinations

Enterprises can take several steps to minimize hallucinations in AI agents, enhancing reliability and accuracy:

Use High-Quality Training Data and a Comprehensive Knowledge Base:

The foundation of accurate AI models is high-quality, diverse training data. Training on well-curated and balanced data helps minimize hallucinations by providing the model with comprehensive, relevant information. This is especially vital in sectors like healthcare or finance, where even minor inaccuracies can have serious consequences.

Define the AI Model’s Purpose With Clarity:

Setting a clear, specific purpose for the AI model helps reduce unnecessary “creativity” in responses. When the model is scoped to a core function, such as customer support or sales recommendations, it stays focused on delivering accurate responses within that domain. For instance, you can give AI agents specific instructions such as: “If a query cannot be answered from the given context, the bot should politely decline rather than guess.”

This approach helps the bot prioritize issue resolution and avoid speculative answers, which maintains accuracy and trustworthiness in interactions.
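A purpose-scoped instruction like this is usually delivered as a system prompt. The sketch below shows one way to assemble it. Several details are assumptions for illustration: the helper `build_messages`, the `REFUSAL_TEXT` wording and the sample context are hypothetical. The role/content message format follows a convention common to many chat-style LLM APIs. No specific provider call is shown.

```python
from typing import Dict, List

# Hypothetical refusal message; the wording is an example only.
REFUSAL_TEXT = "I'm sorry, I can't answer that from the information I have."


def build_messages(purpose: str, context: str, user_query: str) -> List[Dict[str, str]]:
    """Build a role/content message list that scopes the agent to one purpose."""
    system_prompt = (
        f"You are a {purpose} assistant. "
        "Answer only from the context below. "
        "If a query cannot be answered from the given context, "
        f'reply exactly with: "{REFUSAL_TEXT}"\n\n'
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_query},
    ]


# Example: the context says nothing about phone support, so the instructed
# behaviour is to return the refusal text rather than guess.
messages = build_messages(
    purpose="customer support",
    context="Plan A includes email support on weekdays.",
    user_query="Does Plan A include phone support?",
)
```

Asking for an exact, fixed refusal string makes declined answers easy to detect and count downstream.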

Limit Potential Responses:

By constraining the scope of responses, organizations can reduce the chance of hallucinations, especially in high-stakes applications. Defining boundaries for AI responses, such as using predefined answers for specific types of inquiries, helps maintain consistency and avoids the risk of unpredictable outputs.
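One simple way to apply predefined answers is to route recognised high-stakes intents to vetted text and let the model handle only the remainder. The sketch below makes several assumptions. The intent names and keyword rules are hypothetical. The bracketed placeholders stand in for approved policy text. `generate` represents whatever model call you already use. A production system would likely use a trained intent classifier rather than keyword matching.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical approved answers; placeholders stand in for vetted policy text.
PREDEFINED_ANSWERS: Dict[str, str] = {
    "refund_policy": "[Approved refund policy text]",
    "reset_password": "[Approved password reset instructions]",
}

# Hypothetical keyword rules mapping user wording to an intent.
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "refund_policy": ("refund", "money back"),
    "reset_password": ("reset my password", "reset password", "forgot password"),
}


def classify_intent(query: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the query, if any."""
    q = query.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in q for keyword in keywords):
            return intent
    return None


def answer(query: str, generate: Callable[[str], str]) -> str:
    """Use a predefined answer for known intents; otherwise call the model."""
    intent = classify_intent(query)
    if intent is not None:
        return PREDEFINED_ANSWERS[intent]
    return generate(query)
```

For example, `answer("How do I get a refund?", my_model)` returns the approved refund text without calling the model at all. Here `my_model` is a hypothetical callable.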

Use Pre-tailored Data Templates:

Pre-designed data templates provide a structured input format, guiding the AI to generate consistent and accurate responses. By working within predefined structures, the model has less room to wander into incorrect outputs, making templates particularly valuable in sectors requiring a high degree of response accuracy.
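As a minimal sketch, a structured input template can be built with Python’s standard `string.Template`. The field names and the closing instruction are hypothetical examples. The useful property is that `Template.substitute` raises `KeyError` when any slot is missing, so an incomplete record fails loudly and is never passed to the model with a gap it might fill in.

```python
from string import Template

# Hypothetical structured input template; field names are illustrative.
SUPPORT_PROMPT = Template(
    "Customer name: $name\n"
    "Plan: $plan\n"
    "Open ticket: $ticket_id\n"
    "Question: $question\n"
    "Answer in at most two sentences, using only the fields above."
)


def render_prompt(fields: dict) -> str:
    """Fill every slot; Template.substitute raises KeyError if one is missing."""
    return SUPPORT_PROMPT.substitute(fields)


prompt = render_prompt(
    {
        "name": "Asha",
        "plan": "Basic",
        "ticket_id": "T-1042",
        "question": "When will my ticket be resolved?",
    }
)
```

`Template.safe_substitute` would leave missing placeholders in place instead of raising. That is usually the wrong choice here, because the goal is to stop incomplete inputs from reaching the model.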

Assess & Optimize the System Continuously:

Regular testing, monitoring, and fine-tuning are critical to maintaining the model’s alignment with real-world expectations. Continuous optimization helps the AI adapt to new data, detect inaccuracies early on, and sustain accuracy over time.

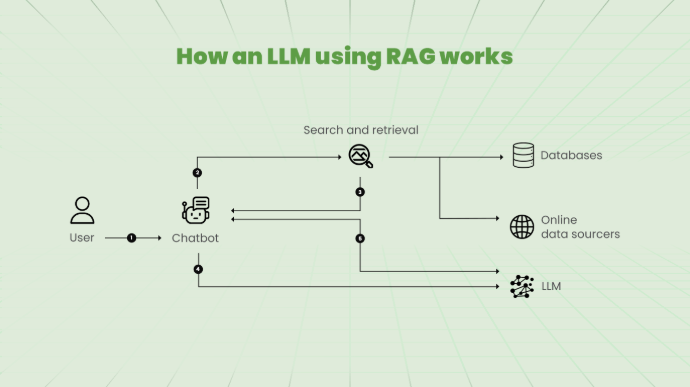
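Continuous assessment often comes down to tracking a hallucination rate over a labelled evaluation set and flagging the system when that rate drifts. The sketch below assumes each response already carries a hallucination label from a reviewer or an automated fact check. `EvalCase` and the 1% default threshold are hypothetical choices.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EvalCase:
    query: str
    response: str
    is_hallucination: bool  # assumed label from a reviewer or automated fact check


def hallucination_rate(cases: List[EvalCase]) -> float:
    """Fraction of evaluated responses labelled as hallucinations."""
    if not cases:
        raise ValueError("evaluation set is empty")
    return sum(case.is_hallucination for case in cases) / len(cases)


def needs_attention(rate: float, threshold: float = 0.01) -> bool:
    """Flag the system for review when the rate exceeds a chosen threshold."""
    return rate > threshold
```

You could run this on a fixed regression set after each prompt, data or model change, so that a rising rate is caught before it reaches customers.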

Use RAG for Optimal Performance:

Retrieval-augmented generation (RAG) retrieves relevant passages from external, verified data sources and supplies them to the model during response generation. This grounds the model’s answers in real, referenceable information. By anchoring responses in verified data, RAG helps prevent the AI from generating unsubstantiated or hallucinatory answers.
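The retrieve-then-ground flow can be sketched in a few lines. To keep the example self-contained, it swaps in simple token-overlap (Jaccard) scoring as a stand-in for the embedding-based vector search most RAG systems use. The function names, `k` and `min_score` are hypothetical. When nothing relevant is retrieved, the sketch returns `None` so that the caller can decline instead of letting the model guess.

```python
import re
from typing import List, Optional, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase word tokens; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 2, min_score: float = 0.1) -> List[Tuple[float, str]]:
    """Return up to k documents whose Jaccard overlap with the query is >= min_score."""
    q = tokenize(query)
    scored = []
    for doc in docs:
        d = tokenize(doc)
        union = q | d
        score = len(q & d) / len(union) if union else 0.0
        if score >= min_score:
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_grounded_prompt(query: str, docs: List[str]) -> Optional[str]:
    """Build a prompt limited to retrieved sources, or None if nothing relevant exists."""
    hits = retrieve(query, docs)
    if not hits:
        return None  # caller should decline rather than let the model guess
    sources = "\n".join(f"[{i + 1}] {doc}" for i, (_, doc) in enumerate(hits))
    return (
        "Answer using only the numbered sources below and cite them by number. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )
```

Numbering the sources and asking for citations makes it easier to verify afterwards that an answer actually came from the retrieved data.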

Count on Human Oversight:

Human oversight provides an essential layer of quality control. Skilled reviewers can catch and correct hallucinations early, especially in the initial training and monitoring stages. This involvement ensures that AI-generated content aligns with organizational standards and relevant expertise.
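A common way to put reviewers where they matter most is to send only risky drafts to a human queue. The sketch below makes two assumptions. First, each draft carries a confidence score in [0, 1] from some upstream checker. Second, it records how many sources the draft cites. `DraftResponse`, `ReviewRouter` and the 0.8 threshold are hypothetical names and values.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque


@dataclass
class DraftResponse:
    query: str
    text: str
    confidence: float  # assumed score in [0, 1] from an upstream checker
    cited_sources: int = 0


@dataclass
class ReviewRouter:
    min_confidence: float = 0.8  # hypothetical threshold
    queue: Deque[DraftResponse] = field(default_factory=deque)

    def route(self, draft: DraftResponse) -> str:
        """Queue low-confidence or unsourced drafts for humans; release the rest."""
        if draft.confidence < self.min_confidence or draft.cited_sources == 0:
            self.queue.append(draft)
            return "human_review"
        return "auto_send"
```

Reviewer corrections collected from the queue can then feed back into the knowledge base and evaluation set.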

These strategies collectively create a more dependable AI model, minimizing hallucinations and enhancing user trust across applications.

How We at Ori Overcome AI Hallucinations with Precision

To recap, hallucinations in generative AI can hinder adoption, mislead customers, and create operational challenges. However, through high-quality data, targeted optimizations, and human oversight, companies can deploy reliable AI with minimal hallucinations.

At Ori, we go beyond standard monitoring by using post-call speech analytics to identify any signs of hallucination. Our approach tracks every response from our AI agents, ensuring that even the slightest inaccuracies are detected. Moreover, we leverage customer sentiment analysis to better adapt responses to customer needs, optimizing accuracy and user satisfaction.

With Ori’s solution, AI agents evolve continuously and maintain a low hallucination rate of 0.5% to 1%, which means at least 99% of responses are accurate. If you are a decision-maker looking for reliable AI that adapts to your real-world needs, schedule a demo with our experts. You’ll learn how our advanced Gen-AI solutions can deliver precise, customer-focused automation across touchpoints in your business.
